From Generic LLMs to Marketing-Native Intelligence: How Marketeam.ai Curates Data with NVIDIA NeMo Curator

- Jane

- 5 days ago

Introduction: From Generic LLMs to Marketing-Native Intelligence

Marketing data is everywhere - ads, analytics, emails, web pages, social posts - and it’s a mess. A small business’s marketing stack might spew out thousands of messy data points and text snippets daily, buried in spreadsheets and dashboards. Generic LLMs like GPT-5 are incredibly fluent, but when asked to make sense of fragmented marketing data, they often stumble. They might produce plausible-sounding campaign plans or copy, yet they lack true understanding of a brand’s metrics, history, and nuance. The result? A Marketing AI that can write a decent tagline, but can’t reason about why last quarter’s ad campaign flopped or how to optimize this month’s budget allocation.

At Marketeam.ai, we faced this gap head-on. We’re a generative AI startup building autonomous marketing agents - essentially an AI “team” of specialists for strategy, campaigns, content, and analytics. We realized early that a generic model alone wasn’t enough to support serious marketing strategy. So we built domain-specific LLMs, including Markethinking, our marketing-native reasoning LLM, tuned specifically for marketing tasks and data. These models power our agents (PPC experts, content strategists, social media gurus, etc.), which operate as co-workers, not just co-pilots, handling everything from campaign ideation to real-time performance optimization. To get there, we needed to feed our models curated marketing intelligence - clean, domain-specific text data covering the breadth of marketing activities.

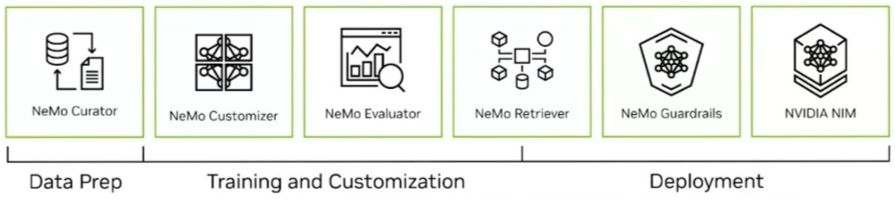

So we partnered with the NVIDIA Inception program for startups and built on the NVIDIA stack - notably NVIDIA NeMo Curator - to turn our chaotic marketing data lake into high-quality corpora for training domain-specific generative AI models. NeMo Curator is a toolkit for large-scale data curation that accelerates data cleaning, filtering, and preparation for LLMs. With it, we replaced ad hoc data scripts with a robust pipeline that ingests raw marketing data and outputs AI-ready datasets. This made our process faster and more maintainable, and it lets us iterate quickly on new data sources and domain rules.

In this blog, we’ll dive deep into why domain-specific LLMs (and even smaller SLMs) make sense for domain-specific AI startups, what unique data challenges we tackle, and how NeMo Curator helps solve them. We’ll walk through the design of our Curator pipeline step by step - from ingesting ads and analytics, through cleaning and deduplication, all the way to assembling training corpora for marketing LLMs. Along the way, we’ll share code snippets and lessons learned. Whether you’re building marketing AI or any other specialized AI, we hope our journey with domain adaptation and NVIDIA NeMo Curator offers useful insights.

Why Domain-Specific LLMs (and SLMs) for Marketing?

Generic LLMs: A Strong Base with Domain Gaps

Large foundation models like Llama, Gemma, DeepSeek, and Qwen have undeniably transformed what AI can do out of the box. They are pre-trained on vast swaths of the internet, which makes them remarkably fluent and knowledgeable in a general sense. We leverage these strengths - Markethinking started from a strong base model. However, when it comes to marketing-specific reasoning, generic LLMs (open and closed) show clear gaps. They weren’t trained on your company’s ad conversions or on the nuanced language of your brand’s voice.

In practice, we saw generic LLMs hallucinate marketing facts or metrics. Ask a vanilla LLM to interpret a spike in CPC (cost-per-click) from an ad report, and it might fabricate a plausible reason that has no grounding in the actual data. Generic models also struggle with the jargon and context of marketing - terms like AEO, GEO, ROAS, LTV, MQL have specific meanings, and strategies like “retargeting past web visitors with dynamic creative” require domain insight. Without access to real performance data or brand guidelines, a generic model’s advice can be surface-level at best.

The limitations aren’t just factual; they’re also contextual and tonal. Marketing content must align with a brand’s voice and comply with channel policies. A generic LLM might generate a catchy tweet, but it might not align with the brand’s tone, or it could violate a subtle ad policy. In short, foundation models give us a foundation, but for high-stakes marketing decisions and content, we needed to close the gap with domain expertise and data.

Domain Adaptation & Domain-Specific LLMs

The solution for us - and a growing trend in the industry - is domain adaptation: taking a strong general LLM and specializing it with in-domain data. Instead of training from scratch (which is impractical), we fine-tune or continue pre-training a model on marketing data (campaign texts, strategy documents, analytics summaries, etc.). This yields a domain-specific LLM that internalizes marketing knowledge and better understands the tasks at hand. We essentially teach the model the language and logic of marketing: what a funnel looks like, how ad KPIs relate to each other, how marketers structure a plan, and so on.

This approach mirrors what’s been done in other fields. In finance and healthcare, for example, teams have created models like BloombergGPT and specialized medical LLMs precisely because generic models “don’t understand niche industries [and] their jargon”. BloombergGPT, a 50-billion-parameter finance model, was trained on financial data and significantly outperforms general models on financial NLP tasks. Bloomberg’s researchers noted that “the complexity and unique terminology of the financial domain warrant a domain-specific model” - the same holds true for marketing. Our experience echoes this: once an LLM was adapted to a brand’s marketing data, it stopped giving generic, vague answers and started providing insights that felt like they came from a seasoned marketing analyst.

Crucially, a well-adapted domain model can even outshine larger generic models on in-domain problems. A recent study in the finance domain showed that a fine-tuned 8B model (trained on financial data) outperformed even 70B general models on finance tasks. In other words, smarter beats bigger when the data is right. Domain adaptation doesn’t just boost performance - it also improves reliability. The model is less likely to “guess” because it has seen real examples. It knows the language of the domain in depth, rather than just pattern-matching from surface text. For our customers, this means an AI that doesn’t just sound confident about marketing strategy, but one that actually understands marketing strategy.

SLM for Marketing: Smaller But Sharper

In building Marketeam’s stack, we also explored SLMs for Marketing - smaller language models that are efficient yet tightly domain-tuned. This direction aligns with recent thinking from NVIDIA Research in the paper “Small Language Models are the Future of Agentic AI”, which argues that agentic systems rarely need broad, conversational intelligence and instead benefit from specialized models performing narrow tasks repeatedly and reliably. So why go small? For one, smaller models (think tens of millions to a few billion parameters) are cheaper to run and can be deployed in secure, private environments - critical for enterprise compliance. If a smaller model can achieve comparable accuracy on marketing tasks through specialization, it’s a clear win for both latency and cost. Our autonomous agents need to operate quickly (for example, generating a campaign report on the fly during a meeting), and an optimized SLM delivers snappier, more predictable responses than a giant general-purpose model would.

Language models are governed by a layered hierarchy of control mechanisms, ranging from surface-level guidance to deep structural shaping of the model itself.

At the most accessible layer is Prompting, which uses natural-language instructions, roles, and in-context examples to influence model behavior within a single interaction; it is fast, flexible, and reversible, but also the most fragile and least reliable form of control.

Steering operates one layer deeper, using mechanisms such as logit biasing, decoding constraints, safety classifiers, or activation-level interventions to bias the model’s output distribution toward desired behaviors - such as politeness, factuality, or safety - without modifying its learned weights.

Retrieval and Context Injection (e.g., RAG) provides a powerful middle layer by supplying the model with external documents, memories, or tools at inference time, allowing it to reason over up-to-date or proprietary information as if it were part of its own knowledge.

For more durable behavioral change, Fine-Tuning (FT) directly updates the model’s weights (either all of them or, in parameter-efficient variants, a small number of added or selected parameters) so that particular tasks, domains, or styles become intrinsically easier and more natural for the model to perform.

At the deepest level, Training & Alignment - including pretraining, reinforcement learning, and preference optimization - shape the model’s internal representations, values, and reasoning patterns, determining what it can understand, what it prefers to do, and which behaviors are even possible in the first place.

Together, these layers form a continuum from soft, reversible control to hard, foundational control.
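To make the steering layer concrete, here is a minimal, framework-free sketch of logit biasing. A fixed offset is added to selected tokens’ scores before the softmax, which shifts the output distribution while the model’s weights stay untouched. The token strings, logit values, and bias amounts are invented for illustration; real inference APIs expose logit bias through their own parameters.

```python
import math


def softmax(logits: dict[str, float]) -> dict[str, float]:
    # Subtract the max logit for numerical stability before exponentiating.
    m = max(logits.values())
    exps = {tok: math.exp(v - m) for tok, v in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def apply_logit_bias(logits: dict[str, float], bias: dict[str, float]) -> dict[str, float]:
    # Add a fixed offset to selected tokens; tokens without an entry are unchanged.
    # The model's weights are never touched, only its output scores.
    return {tok: v + bias.get(tok, 0.0) for tok, v in logits.items()}


# Hypothetical next-token logits for a call-to-action slot in ad copy.
logits = {"Buy": 2.0, "Discover": 1.5, "CHEAP": 1.8}
# Hypothetical bias: effectively ban an off-brand token, nudge an on-brand one.
bias = {"CHEAP": -100.0, "Discover": 1.0}

before = softmax(logits)
after = softmax(apply_logit_bias(logits, bias))
print(max(before, key=before.get))  # Buy
print(max(after, key=after.get))    # Discover
```

The same model produces a different top token purely because its output scores were shifted, which is why steering is cheap and reversible but cannot teach the model anything new.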

Domain-specific training makes these SLMs viable. A narrow focus (marketing) means the model doesn’t waste capacity on everything under the sun - it uses its smaller capacity to become an expert in marketing. After training on our curated corpus, even a relatively compact model starts to demonstrate sharp skills in the marketing domain: understanding industry acronyms, following brand style guidelines, and recalling past campaign outcomes for a brand.

The key is that the NVIDIA NeMo Curator pipeline (which we’ll detail below) supplies high-quality, relevant text for training. High signal, low noise - this allows a smaller model to punch above its weight.

In summary, general LLMs gave us a starting brain, but domain-specific LLMs gave us a brain that thinks like a marketer. Through domain adaptation, Marketeam achieved a level of marketing intelligence and reliability that a generic model alone couldn’t match. And by building some smaller specialized models (SLMs), we balanced performance with practicality, deploying models that are right-sized for our customers’ needs without sacrificing marketing-savvy behavior. All of this, however, hinges on one thing: a marketing data pipeline that feeds these models the right data. That is where the marketing data problem, and our solution with NeMo Curator, come in, as the first step of domain adaptation.

The Marketing Data Problem: What We Have to Tame

Marketing Data Sources We Unify

At Marketeam, our domain is marketing, so our data comes from virtually every tool and channel a marketer touches. Part of our innovation is to unify these disparate data sources into one coherent knowledge base for training. Here’s a snapshot of the data we pull together for each brand we support:

Ad performance data: Pay-per-click (PPC) and online ad campaigns provide rich text (ad headlines, descriptions) along with structured metrics (impressions, clicks, CTR, CPC, CPA, conversion rates, ROAS, budgets, etc.). We ingest data from platforms like Google Ads and Facebook Ads - everything from the text of the ads to the targeting parameters and the results.

Web & product analytics: This includes data from Google Analytics, product analytics tools, etc. We get textual descriptions of events (e.g. “Added to cart”, “Signed up for newsletter” steps) and funnel definitions, along with numbers on user behavior. Even though much analytics data is numeric, the names of events, goals, and segments carry context that is valuable as text.

Social media and community content: We collect posts, comments, and engagement metrics from social platforms (Twitter/X, LinkedIn, Instagram, Reddit, etc.) relevant to a brand. The content (text of posts, replies) plus engagement indicators (likes, shares, sentiment) tell a story of how the brand communicates and how the audience reacts.

Content assets & brand guidelines: Every brand has a trove of content - website copy, landing pages, blog articles, email campaigns, product descriptions, maybe even video transcripts or scripts. We gather this textual content along with any available style guides or brand voice documentation. This teaches our model how the brand “speaks” and what messages it emphasizes.

Competitive and market data: Marketing doesn’t happen in a vacuum, so we also ingest external data like competitor ad copy (public ads), industry news, and trending topics (e.g., from marketing newsletters or reports). This gives context to what’s happening in the market, which our model can use to reason (for example, recognizing seasonal events or competitor moves).

Historical marketing data: Perhaps most importantly, we maintain a longitudinal record of what each brand has done and what the outcomes were. This includes past campaigns, A/B tests, seasonal performance trends, etc. Over time, this becomes a gold mine of “what works vs what doesn’t” for that specific domain. Our LLM training uses this history to ground the model in causal, strategic knowledge (e.g., understanding that “50% off” discounts worked last Black Friday but not in the summer sale).

All these sources come in different shapes and formats - CSV exports, JSON from APIs, HTML pages, etc. Part of our job is to extract the textual pieces from them (like ad headlines, post text, email body content) and attach the right context metadata (like “this came from a Facebook Ad in Sept 2025 with X clicks”). We also ensure brand-by-brand isolation - each brand’s data stays separate, to respect confidentiality and to allow the model to develop a unique understanding per brand. The challenge is that once we have this mountain of raw marketing text and data, it’s anything but ready for LLM training.
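As an illustration of that extraction step, the sketch below flattens two hypothetical source formats (an ad-platform CSV export and a JSON list of social posts) into one record type that carries the text, a brand ID, its source, the field it came from, and context metadata. The column names, payload shape, and `Record` schema are assumptions made for this example, not our production schema or a NeMo Curator API.

```python
import csv
import io
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Record:
    text: str                 # the extracted text span
    brand_id: str             # keeps each brand's data isolated
    source: str               # e.g. "ads", "social"
    kind: str                 # e.g. "ad_headline", "post_text"
    meta: dict = field(default_factory=dict)  # context such as date or clicks


def from_ads_csv(raw: str, brand_id: str) -> list[Record]:
    # Assumed export columns: headline, description, clicks, date.
    out = []
    for row in csv.DictReader(io.StringIO(raw)):
        meta = {"clicks": int(row["clicks"]), "date": row["date"]}
        for col in ("headline", "description"):
            text = (row.get(col) or "").strip()
            if text:  # one record per non-empty text field
                out.append(Record(text, brand_id, "ads", f"ad_{col}", dict(meta)))
    return out


def from_social_json(raw: str, brand_id: str) -> list[Record]:
    # Assumed API payload: a JSON list of {"text": ..., "likes": ...} objects.
    return [
        Record(p["text"].strip(), brand_id, "social", "post_text", {"likes": p.get("likes", 0)})
        for p in json.loads(raw)
        if p.get("text", "").strip()
    ]


ads_csv = "headline,description,clicks,date\nSpring Sale,Up to 30% off sneakers,120,2025-09-01\n"
social_json = '[{"text": "Loving the new release!", "likes": 42}]'

records = from_ads_csv(ads_csv, "brand_a") + from_social_json(social_json, "brand_a")
jsonl = "\n".join(json.dumps(asdict(r)) for r in records)  # one JSON object per line
print(len(records))  # 3
```

Keeping one record per text field, rather than one per source row, is what lets later stages tag and balance “Ad Headline” versus “Post Text” content separately.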

Why This is Hard for LLM Training

Raw marketing data is not a nice, clean text corpus. It’s a tangled mess that would make an LLM training run scream in agony if we fed it as-is. Here are some of the key challenges we’ve faced in turning this data into something usable:

Fragmentation & Format Variety: Each source has its own format and quirks. Ad data might come with a lot of CSV columns and some free-form text fields (headline, description). Web content might be HTML with boilerplate menus and footers. Social data might include emojis, hashtags, and @mentions. Simply loading and parsing all these consistently is a non-trivial task.

Duplicated and Redundant Content: Marketing content is highly repetitive. The same ad text might be used across 10 campaigns. The website’s footer (“© 2025 Company - Privacy Policy”) appears on every page. Our raw dump of emails might include a standard welcome message sent to 5,000 customers - that’s 5,000 duplicates of “Hi {Name}, welcome to…”. If we don’t deduplicate, our model could get a very skewed sense of what text is common (it might overweight common boilerplate and start babbling about privacy policies in every answer!).

Boilerplate and Low-Value Text: Related to duplicates, a lot of content is just boilerplate or auto-generated junk. Think of things like tracking code parameters (UTM codes in URLs), cookie consent texts, unsubscribe links, or the text of a generic form confirmation. These don’t add useful knowledge for the model; they’re noise. We need to strip away HTML tags, navigation menu text, and other clutter from web pages, and remove low-information pieces.

Inconsistent Data & Metrics References: Marketing data often contains references to metrics or IDs that are contextual. For instance, an analytics report might say “Bounce rate: 47.2%” or an email might include “User ID: 12345”. These raw values aren’t useful for training (and might even be sensitive). Also, the same concept can be named differently across systems (“email” vs “newsletter sign-up” vs “lead capture”). If not normalized, the model might think they’re unrelated. In short, without careful processing, the model could pick up false distinctions or irrelevant facts.

PII and Sensitive Information: Marketing data is full of personally identifiable information - customer names, emails, phone numbers, etc., especially in CRM or email marketing logs. We absolutely cannot let raw PII leak into the training data. Not only would that be a compliance and privacy nightmare, it could also lead the model to memorize or regurgitate personal data. Any solution must identify and remove or anonymize PII at scale.

Quality Variation and Noise: Not all text we collect is high-quality prose. Social media comments might include profanity or random gibberish. Some auto-generated content might be grammatically broken. If we train on everything, the model might learn bad habits (e.g., picking up spammy sales language or even toxic language from an angry Twitter reply). We need to filter out the “garbage” - both for ethical reasons and to keep the model’s output professional.

Imbalanced and Biased Samples: One brand might have 100× more ad copy than another. Some campaigns (like a major product launch) could dominate the corpus while other subtle but important things (like internal strategy documents) are few. If we naively throw it all in, the model might underrepresent the quieter but crucial aspects (e.g., strategy reasoning) and overrepresent flashy ad slogans. We have to carefully balance and sample so that the model learns a well-rounded view of marketing tasks.
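For the naming problem in particular, one lightweight approach is an alias table applied before text is emitted, so that tool-specific labels collapse to one canonical name. The sketch below assumes a hand-maintained, hypothetical `CANONICAL_EVENTS` mapping; a real table would be curated per tool and reviewed by marketers.

```python
import re

# Hypothetical alias table mapping tool-specific event names to one canonical label.
CANONICAL_EVENTS = {
    "email": "lead_capture",
    "newsletter sign-up": "lead_capture",
    "newsletter signup": "lead_capture",
    "lead capture": "lead_capture",
    "added to cart": "add_to_cart",
    "add to cart": "add_to_cart",
}


def canonical_event(name: str) -> str:
    # Lower-case and collapse runs of whitespace/underscores before lookup,
    # so "Newsletter  Sign-up" and "newsletter_sign-up" hit the same key.
    key = re.sub(r"[\s_]+", " ", name.strip().lower())
    # Unknown names pass through (normalized) so they can be reviewed later.
    return CANONICAL_EVENTS.get(key, key)


print(canonical_event("Newsletter  Sign-up"))  # lead_capture
print(canonical_event("ADD_TO_CART"))          # add_to_cart
print(canonical_event("Video Play"))           # video play
```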

In essence, the raw data is rich but raw - it contains the knowledge we need, but in a form that’s unsuitable for direct ingestion by an LLM. Without curation, training on this data could lead to a model that is confused, biased, or even leaking sensitive info. We needed to tame this wild data into a structured, clean marketing corpus.

What We Want Instead: A Curated Marketing Corpus

Our endgame is to have a curated marketing corpus that is AI-ready. That means:

Cleaned and normalized text: All the textual data (ad copy, content, social posts, etc.) should be in plain text form, free of HTML tags, extraneous whitespace, weird encoding artifacts, and boilerplate. A sentence from a landing page should read like normal prose, not like a web scraper dump.

Deduplicated content: Each unique piece of text or information should ideally appear once (or at least not an overwhelming number of times) in the training set. If two ads have the same headline, we keep it once. If a blog post was updated, we might keep only the latest version. This way, the model isn’t overfitting to duplicates.

Relevant and high-quality text: Through filtering, we want to remove spammy content, irrelevant pieces, or extremely low-information bits. The corpus should mostly contain meaningful marketing language: persuasive copy, strategic narratives, informative analysis. By weight, the majority of tokens the model sees should be “good” tokens (domain-relevant and well-formed).

Properly labeled and segmented data: The curated dataset isn’t just a blob of text. We attach metadata or structure where helpful. For example, we might tag a piece of text as “Ad Headline” vs “Blog Body” vs “Tweet” so that we can later ensure the model is exposed to a mix of contexts. We might also maintain per-brand segmentation so that during fine-tuning we can do some brand-specific adaptation without overlap. Having this structure also helps in evaluation - we can create test sets for each task (e.g., a test set of “generate a tweet” vs “summarize a campaign report”).

PII-safe data: All personal identifiers should be removed or anonymized. If an email said “Contact John at john@example.com for a demo,” our curated version might read “Contact {{name}} at {{email}} for a demo.” The model then learns the pattern without ever seeing the real email or name. This not only protects individuals, but it also stops the model from relying on possibly irrelevant personal clues (like if it saw 100 emails addressed to “Alex”, it might otherwise bias toward that name in outputs).

Balanced and representative sampling: We design the corpus such that different facets of marketing are represented. Some amount of repetition is actually good - e.g., we want the model to see multiple examples of writing an ad headline, since that’s a skill it needs. But we ensure it also sees longer-form strategy documents so it can reason. We also balance recent data vs older data to avoid temporal bias (marketing trends change, but core principles last - the model should learn both the enduring knowledge and be adaptable to recent shifts).
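To show what placeholder-style redaction means mechanically, here is a deliberately simple sketch that reproduces the “Contact {{name}} at {{email}}” example. It is not NeMo Curator’s PII module: emails and phone numbers are found with regular expressions, and names come from a supplied list (a hypothetical stand-in for the NER models a production detector would use).

```python
import re

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# Deliberately crude: 9+ digits/separators that start and end with a digit.
# It will also catch some dates and order numbers, so review its hits before relying on it.
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str, known_names: set[str]) -> str:
    # Emails first, so the name inside "john@example.com" is consumed with the address.
    text = EMAIL_RE.sub("{{email}}", text)
    text = PHONE_RE.sub("{{phone}}", text)
    # Whole-word match against a supplied name list: a stand-in for a real NER model.
    # Longest names first so "John Smith" is replaced before "John".
    for name in sorted(known_names, key=len, reverse=True):
        text = re.sub(rf"\b{re.escape(name)}\b", "{{name}}", text)
    return text


print(redact("Contact John at john@example.com for a demo.", {"John"}))
# Contact {{name}} at {{email}} for a demo.
```

Replacing values with typed placeholders, rather than deleting them, keeps sentences grammatical so the model still learns the pattern “contact a person at an address”.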

In a nutshell, we want a golden training dataset that captures the essence of marketing communications and strategy, without the noise. Getting to that point required building a data curation pipeline. Instead of one-off scripts, we needed a systematic way to go from raw inputs to this polished corpus. This is where NVIDIA NeMo Curator became our toolbox and framework.

Enter NVIDIA NeMo Curator: Conceptual Overview

What is NVIDIA NeMo Curator?

NVIDIA NeMo Curator is a toolkit purpose-built for tasks like ours: large-scale text (and multimodal) data curation for AI. In simpler terms, it’s a framework that helps you build pipelines to load, clean, filter, and prepare data for training large models. Under the hood, it’s designed for scale, using distributed execution (via Ray or Dask) and GPU acceleration, but it presents a clean, Pythonic pipeline API to developers.

Think of NeMo Curator as a LEGO set for data preprocessing. It provides modular components (stages) for common operations: downloading data, extracting archives, normalizing text, filtering by various criteria, removing duplicates, and more. You assemble these stages into a pipeline, then run it on your data (whether it’s 10 GB or 100 TB). Curator handles the heavy lifting in parallel, making use of GPU resources so that even massive corpora can be processed efficiently. For us, this meant we could scale our curation as our data grew - from a few brands’ data to hundreds of brands - without rewriting everything.

Importantly, NeMo Curator is part of the NVIDIA NeMo ecosystem, which is all about building and managing AI models (especially language models and AI agents). That means it’s not a generic ETL tool, but one tuned for LLM training workflows. It anticipates needs like splitting data into training/validation sets, shuffling and blending different corpora, and keeping track of metadata. It’s also built with quality in mind - a lot of its features exist to ensure the data you feed your model is of high quality, because that directly affects model accuracy. NVIDIA often says “high-quality data processed from NeMo Curator enables you to achieve higher accuracy with less data and faster model convergence” - which we found to be true. By aggressively filtering junk out, our model trained faster and performed better, since it wasn’t wasting capacity on noise.

In summary, NeMo Curator is like our data sommelier: it takes in raw, unfiltered “grapes” of text and helps produce a fine wine of a dataset ready for consumption by our models. It gave us a formal pipeline to replace the patchwork of ad hoc scripts, making our data processing faster, easier to change, and easier to maintain.

NeMo Curator’s Text Data Lifecycle

How does NeMo Curator structure the data curation process? Conceptually, it breaks it down into stages that map well to what we needed:

Load (Data Acquisition): Ingest data from various sources. Curator can read from local files, cloud storage buckets, databases, or even scrape web sources. In our case, we primarily load from files and APIs we’ve collected - e.g., CSV exports of ad data, JSON dumps of social posts, HTML from web pages. Curator’s I/O modules help unify these inputs into a common format (like converting everything to a stream of documents or records).

Process (Cleaning & Filtering): This is the core transformation phase, often composed of multiple sub-steps:

Cleaning & Normalization: Fix encoding issues (e.g., weird Unicode from copy-pastes), strip HTML tags, standardize text casing where appropriate, and so on.

Structural filtering: Remove or flag content that’s too short, too long, or non-textual (e.g., an empty field or a blob of base64-encoded image data that somehow snuck in).

Language filtering: If you only want certain languages, identify language and filter out others.

Quality filtering: Apply heuristic rules and ML models to drop low-quality content. For instance, remove documents that have excessive profanity or look like spam, and keep those that match a desired level of quality or domain relevance.

Safety and policy filtering: Remove toxic content, hate speech, or anything against guidelines (especially important if the data is user-generated content).

PII detection & removal: Find any personally identifiable info and redact it (more on this later, but Curator has built-in modules for PII).

Deduplication: After cleaning and basic filtering, Curator can deduplicate at multiple levels:

Exact deduplication: Remove exact duplicate texts (often via hashing each document).

Fuzzy or near-dup: Identify very similar texts (maybe one has an extra word or minor variation) and remove redundants.

Semantic deduplication: Using embeddings to detect semantically identical content that might not be obviously similar character-wise. For example, two press releases with different wording but essentially the same announcement.

Exclusion: we do not deduplicate A/B-test-style data, because we need those near-identical variants intact.

Generate/Augment: NeMo Curator also supports a synthetic data generation stage (using LLMs to create new data or augment existing data). This is something we are doing carefully, but it’s an advanced topic we won’t focus on here.

Final Assembly: Once the data is cleaned and deduped, the pipeline can shuffle and blend data from different sources, split into train/val/test sets, and then output in the desired format (JSONL, Parquet, etc.). This stage ensures the final output is ready to be consumed by the model training pipeline (e.g., it might output a Hugging Face dataset or just files in storage).
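The split part of final assembly can be sketched in a few lines. This version assumes each document has a stable `id` field (a hypothetical schema) and hashes it to choose train, validation, or test, so re-running the pipeline never moves a document across splits; blending weights across sources are left out. It illustrates the idea only and is not Curator’s own shuffling or splitting code.

```python
import hashlib
import random


def assign_split(doc_id: str, val_frac: float = 0.05, test_frac: float = 0.05) -> str:
    # Map the stable document ID to a number in [0, 1) using 32 bits of SHA-256.
    # The same ID always lands in the same split, so evaluation data never leaks
    # into training when the pipeline is re-run on a grown corpus.
    h = int(hashlib.sha256(doc_id.encode("utf-8")).hexdigest()[:8], 16) / 2**32
    if h < test_frac:
        return "test"
    if h < test_frac + val_frac:
        return "val"
    return "train"


def assemble(docs: list[dict], seed: int = 0) -> dict[str, list[dict]]:
    splits: dict[str, list[dict]] = {"train": [], "val": [], "test": []}
    for d in docs:
        splits[assign_split(d["id"])].append(d)
    rng = random.Random(seed)  # seeded, so the shuffle is reproducible
    for part in splits.values():
        rng.shuffle(part)  # interleave sources within each split
    return splits


docs = [{"id": f"doc-{i}", "text": f"example {i}"} for i in range(1000)]
splits = assemble(docs)
print({k: len(v) for k, v in splits.items()})
```

Hashing the ID instead of drawing a random number per run is the design choice that matters here: a held-out document stays held out across every future rebuild of the corpus.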

Throughout these stages, NeMo Curator leverages a distributed processing engine. It can use Ray to parallelize work across multiple GPUs, so if you have 1,000 files to clean, many of them are processed in parallel. Filters that use ML models (like a content-quality classifier) can also be accelerated on GPUs. This is crucial for scaling: we can throw more hardware at the problem to cut processing time.

Why NeMo Curator Fits Marketing Data

Looking at the challenges we listed and the capabilities of NeMo Curator, it’s a great match for a few key reasons:

Volume & Variety: Marketing data isn’t just one big text dump; it’s many pieces from many sources. NeMo Curator’s flexible data source ingestion meant we could plug in all our sources - whether it’s reading a bunch of JSON files or connecting to a cloud bucket of HTML exports. And its ability to handle huge volumes (the toolkit is tested on petabyte-scale data) means as our clients’ data grows, the pipeline keeps up.

Modular Filters for Domain Rules: We have several custom rules, such as ones that target blog posts without links or campaign briefs without examples. These filters encode domain expertise and are some of our best secret sauce. NeMo Curator’s pipeline approach lets us slot in custom Python stages or tweak existing ones to embody these marketing-specific heuristics. Instead of one monolithic script, we have a chain of well-defined steps. Need to change how we define a “low-quality social post”? Just adjust that stage.

Built-in Deduplication: The fact that NeMo Curator provides dedup at scale is a lifesaver. Marketing data has tons of duplicates, and doing robust dedup (especially fuzzy matching) by ourselves on millions of lines would be painful. NeMo Curator leverages efficient algorithms (like MinHash) and even GPU embedding techniques for semantic dedup, which dramatically sped up what would otherwise be a slow process. Deduping ensures our model won’t get confused by repeated texts.

Quality & Safety Filters: NeMo Curator includes ready-made filters for common issues - e.g., removing non-printable characters, filtering by word count, or applying NSFW/toxicity filters (they have classifier-based filters too). For marketing, we obviously want to remove content that is hateful or extremely inappropriate, even if it was present in some social comment. NeMo Curator gave us a starting set of filters that we then augmented with domain knowledge (like filtering out “lorem ipsum” text or SEO spam pages).

PII Removal: One of the standout features for us was the PII removal module. NeMo Curator provides a GPU-accelerated PII detection and redaction component that can identify things like names, emails, phone numbers, addresses, etc., and mask them. It’s even configurable by category - for instance, we can choose to remove emails and phone numbers but maybe not company names, depending on needs. By using this, we didn’t have to reinvent the wheel for privacy compliance. We just feed our text through the PII stage, and we get sanitized output where, e.g., “John Smith” becomes “{{name}}” and “john@example.com” becomes “{{email}}”. This was huge for building trust with clients and ensuring we don’t accidentally train on or output sensitive data.

Pipeline Abstraction = Maintainability: Before NeMo Curator, imagine a pile of scripts: one to clean HTML, another to join CSVs, another to de-dupe via some Python library, etc. That’s hard to maintain or reuse. With Curator, everything is in one pipeline definition. We can version it, test it on small samples, and reuse it for each new client’s data. It’s like having a blueprint for data processing. If we learn a new best practice (say, a better way to filter out automated bot traffic text), we update the pipeline and re-run - voila, new datasets ready.
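To illustrate the fuzzy-matching idea behind MinHash, here is a small pure-Python version: documents are turned into word 3-shingles, each shingle set is summarized by 64 seeded minimum hashes, and the fraction of matching minimums estimates Jaccard similarity. This is a teaching sketch, not NeMo Curator’s GPU implementation; the 0.8 threshold and the `ab_test` flag (mirroring our exclusion of A/B variants) are assumptions.

```python
import hashlib
import re


def shingles(text: str, k: int = 3) -> set[str]:
    # Word-level k-shingles over lower-cased text, ignoring punctuation.
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        return {" ".join(words)}
    return {" ".join(words[i:i + k]) for i in range(len(words) - k + 1)}


def minhash(text: str, num_perm: int = 64) -> list[int]:
    # Simulate num_perm random permutations with seeded 64-bit hashes and keep
    # the minimum hash of the shingle set under each one.
    grams = shingles(text)
    return [
        min(
            int.from_bytes(hashlib.blake2b(f"{seed}:{g}".encode(), digest_size=8).digest(), "big")
            for g in grams
        )
        for seed in range(num_perm)
    ]


def est_jaccard(a: list[int], b: list[int]) -> float:
    # The fraction of matching minimums estimates Jaccard similarity of the shingle sets.
    return sum(x == y for x, y in zip(a, b)) / len(a)


def near_dedup(docs: list[dict], threshold: float = 0.8) -> list[dict]:
    # Pairwise comparison for clarity; at scale, LSH banding avoids the O(n^2) loop.
    kept, sigs = [], []
    for doc in docs:
        if doc.get("ab_test"):  # hypothetical flag: A/B variants are kept on purpose
            kept.append(doc)
            continue
        sig = minhash(doc["text"])
        if all(est_jaccard(sig, s) < threshold for s in sigs):
            kept.append(doc)
            sigs.append(sig)
    return kept


docs = [
    {"text": "Spring Sale! 30% off."},
    {"text": "spring sale: 30% OFF"},  # same shingles once case and punctuation are ignored
    {"text": "Join our webinar on email deliverability next Tuesday"},
    {"text": "Spring Sale! 30% off.", "ab_test": True},
]
print([d["text"] for d in near_dedup(docs)])
```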

In short, NeMo Curator was a natural fit to tame our marketing data jungle. It provided the heavy machinery and framework so we could focus on the marketing logic rather than on building an entire data processing system from scratch. Next, let’s look at how we designed our specific pipeline using NeMo Curator, with the objectives and processors tuned for marketing needs.

Designing a NeMo Curator Pipeline for Marketing Data

Pipeline Objectives at Marketeam.ai

When we set out to build our NeMo Curator pipeline, we laid down clear objectives reflecting the “ideal corpus” vision described earlier. These objectives shaped which stages and filters we included. In summary, our pipeline needed to:

Ingest heterogeneous marketing data sources: Be able to load data from CSV, JSON, HTML, etc., coming from ads, analytics, content management systems, and more. We wanted one pipeline to handle all these inputs together or in parallel.

Normalize and clean the text fields: Convert everything to plain text with consistent encoding. Remove HTML tags, JavaScript snippets, or markdown, normalize Unicode characters (e.g., fancy quotes to standard quotes), and fix any common formatting issues.

Enforce language and structural constraints: Since we primarily operate in English (for now), the pipeline should drop content in unsupported languages or flag it for separate handling. Also, remove any empty or nonsense entries and apply length thresholds so extremely short fragments or overly long texts (beyond a reasonable limit) are dealt with.

Filter out low-quality or unsafe content: Integrate both heuristic and ML-based filters to remove content that is spammy, extremely vulgar, or otherwise not something we want the model to learn from. For example, filter out texts that have more than 50% non-alphabetic characters (likely tracking codes or gibberish), or that trigger a toxicity classifier above a certain threshold.

Perform PII detection & redaction: As emphasized, find all personal data and redact it in the text. The pipeline should ensure that by the time we output the dataset, it’s free of things like emails, phone numbers, physical addresses, full names of private individuals, etc.

Deduplicate aggressively: Apply multi-level deduplication (exact and fuzzy) so that the final dataset has minimal redundancy. We set this as a priority to avoid the “echo chamber” effect on the model and to reduce dataset size (for efficiency) without losing unique information.

Enrich or label data where useful: While not altering the raw text meaning, we wanted to carry along some metadata. For instance, if a piece of text came from an “Ad Headline” field vs a “Blog Body”, that can be recorded (even if just in a separate column or filename). This allows future processing or analysis per type. We also attach a brand ID to each data point, so we can later filter or split by brand if needed.

Output structured, ready-to-use datasets: Finally, the pipeline should output the curated corpus in a format ready for training - in our case we often use JSONL (JSON Lines) or Parquet, with each record being a cleaned piece of text and its metadata. We also prepare a small held-out set of data for evaluation, ensuring it’s separated from training data (to avoid contamination).

These objectives made it clear which Curator components we’d need (e.g., the PII module, dedup stages, etc.). Essentially, each bullet above corresponds to one or more pipeline stages that we’ll add. With objectives set, we proceeded to pick and configure the text processors.

Key Text Processors and Their Marketing-Specific Roles

NeMo Curator provides a library of text processing modules, and we supplemented those with a few custom rules. Here are the key processors (stages) we use, and how we tailor them for marketing data:

Text normalization: We use NeMo Curator’s text cleaners to normalize Unicode (fix broken characters, unify quotes and dashes) and to strip out unwanted HTML/XML markup. For example, if a landing page’s text included HTML tags or entities (&nbsp;, <div>, etc.), the normalizer cleans those so we get just the textual content. We also remove common junk like leftover template markers (e.g., {{ FirstName }} in an email template) because those aren’t useful for the model.

Language detection & filtering: Our pipeline leverages a language ID filter (based on FastText under the hood) to detect the language of each text. We configure it to keep English (since that’s our initial market) and drop other languages or route them for separate handling. This ensures, for instance, that a stray Spanish tweet in the dataset doesn’t confuse an English-only model. The filter uses a confidence threshold, so if it’s not sure about the language, we might choose to drop that text to be safe.

Length and structure filters: We apply a minimum length filter to remove trivially short texts, except where short length is expected. For example, we drop anything under, say, X words unless it’s identified as an ad headline or social post (which can be very short but still meaningful). Conversely, we flag extremely long text (e.g., a 50,000-character HTML dump), because it likely includes navigation menus or code; such texts are either truncated or broken up into smaller pieces in an earlier step. We also use a non-alphanumeric filter to remove any entry that is, say, more than 50% symbols/numbers, since such text is often not natural language (e.g., a string of product IDs or a block of HTML color codes).

Quality and safety filtering: For safety we integrated a profanity or toxic content filter. NeMo Curator allows classifier-based filters, so we use one of the pre-trained classifiers and a word list to detect toxic language and drop those texts. In a marketing context, we found this mostly removes things like extremely angry customer comments, “dark” online commercials, or off-topic content that isn’t valuable to learn from.

PII detection & redaction: This is a crucial processor in our pipeline. We use the PII recognizer module from NeMo Curator, configured to catch common PII like names, emails, phone numbers, physical addresses, etc. The module uses a combination of pattern matching and an optional LLM-based approach to identify PII. We opt for a conservative approach: anything that looks like an email (something@domain.com) gets replaced with {{email}}, numbers that resemble phone numbers become {{phone_number}}, names get {{name}}, and so on. NeMo Curator’s default PII labels cover a wide range, from usernames to IP addresses to credit card numbers, and we can choose which ones to redact. We ensure that after this stage, the text is safe: e.g., “Call John at 555-1234” → “Call {{name}} at {{phone_number}}”. The redaction placeholders still carry meaning (we know it was a name, a phone, etc.) but the actual personal info is gone.

Deduplication (exact & fuzzy): We incorporate two deduplication stages. First, an exact dedup stage hashes each text and drops exact matches. This handles cases like identical emails sent to many people or repeated ad copy. Second, we use a “fuzzy” approach. For example, “50% off all items today only!” and “50 percent off all items today only!!” are essentially the same message with minor differences: exact matching won’t flag them, but fuzzy dedup groups them together. We keep just one text per group, and a similarity threshold controls how close two texts must be to count as duplicates. We found this especially useful for A/B test variants or content with minor personalization differences, and it reduced the size of the dataset without losing unique information for our domain. As a bonus, this also helped surface truly unique variations that we do want to keep: the logs showed how many near-duplicates were dropped, giving us a sense of how repetitive some content was.

Metadata enrichment: As a final touch, we enrich the data with a bit of metadata. For instance, we add fields like source (e.g., “GoogleAd” vs “Website”) or content type (ad_headline, ad_body, blog_text, tweet, etc.) as metadata columns. NeMo Curator allows passing through such extra info alongside the text. We also add a brand_id or pseudonym for the brand. This way, the final JSONL or Parquet has not just the cleaned text, but also these tags. This doesn’t directly affect the model training (unless we explicitly use it), but it’s invaluable for analysis and potential future use (e.g., we could condition the model on content type if we wanted to).
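To make the pattern-matching side of PII redaction concrete, here is a minimal sketch in plain Python (standard library only). It is not NeMo Curator’s PII module: the regexes below are illustrative assumptions that cover only emails and North American style phone numbers, and the helper name `redact_contacts` is hypothetical. Names such as “John” need a model-based recognizer and are deliberately left untouched here.

```python
import re

# Illustrative patterns only (assumption); NeMo Curator's PII modules use richer recognizers.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Matches 555-1234, 555-123-4567 and (123) 456-7890 style numbers.
PHONE_RE = re.compile(r"(?:\(\d{3}\)\s?|\b\d{3}[-.\s])?\b\d{3}[-.\s]\d{4}\b")


def redact_contacts(text: str) -> str:
    """Replace emails and phone numbers with {{label}} placeholders (hypothetical helper)."""
    text = EMAIL_RE.sub("{{email}}", text)
    return PHONE_RE.sub("{{phone_number}}", text)


print(redact_contacts("Call John at 555-1234"))  # prints: Call John at {{phone_number}}
```

The printed result shows the limitation of regexes alone: the phone number is masked, but “John” survives, which is why name detection has to come from the PII module’s recognizers rather than from patterns like these.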

By combining these processors, we essentially encode our marketing domain knowledge into the pipeline. The pipeline isn’t just a generic cleaner - it has the smarts to treat marketing-specific content appropriately (like knowing short ad texts are okay, or that PII is prevalent in certain fields).

It is the blend of NeMo Curator’s tools and our domain rules that results in a high-quality dataset.

Domain Focus: Encoding Marketing Rules into NeMo Curator

To illustrate how we wove domain-specific logic into the Curator pipeline, let’s walk through a couple of concrete examples:

Short Text Handling: A generic filter might say “drop anything under 5 words as it’s probably noise.” But in marketing, a 2-word phrase could be a goldmine (think of Nike’s “Just Do It”). Our pipeline uses metadata to contextually override filters. For instance, we allow very short text if the content_type metadata says it’s an ad_headline or email_subject. On the other hand, if we encounter a 1-2 word text with no context (maybe a tag or a user’s one-word comment like “Thanks”), we drop it. This way, we don’t accidentally lose valuable short content.

Template Text Removal: We identified certain patterns that are essentially template artifacts. For example, a lot of emails end with “If you wish to unsubscribe, click here...” or have boilerplate like “© 2025 CompanyName”. We maintain a small list of regex patterns and exact strings to remove such boilerplate during cleaning. We plugged this into the normalization stage. It’s trivial text for a human, but for an LLM, seeing “unsubscribe” 10,000 times could mis-calibrate its understanding of typical email content. By removing or reducing these, we let the model focus on the core message of emails (the marketing copy).

CRM Notes with PII: Some of our data includes CRM exports where the “notes” field might say something like “Spoke with John Doe on 01/05, follow up next week.” This is semi-structured free text but contains a name and date. Our PII filter will catch “John Doe” and turn it into {{name}}. For dates, we decided to keep them as they are generally not PII by themselves; plus, understanding dates can be useful for the model to learn temporal reasoning. But we did normalize date formats (e.g., everything to YYYY-MM-DD) for consistency.

Channel-specific quirks: Each marketing channel has quirks. In social media text, for example, hashtags are important (#NewProductLaunch conveys a lot). We did not remove hashtags or @mentions entirely; instead, we might separate the # or mask @usernames as {{user_name}} via PII logic (since an @username can be considered personal data). In ad copy, we noticed a lot of variations of “Buy now” or “Click here” - rather than deduping those out (since context matters), we left them but ensured they didn’t overwhelm the corpus by downsampling if needed. For web pages, we gave special treatment to title tags and meta descriptions - we pulled them as separate short texts since those often summarize content and are akin to ad headlines.

Multi-language considerations: While we focus on English, some brands had a bit of content in other languages (say a Spanish version of a landing page). Instead of throwing it out entirely, we actually set aside non-English content in case we train multilingual models later. NeMo Curator’s language ID stage can output the detected language with a confidence, so we used that metadata. Any text with language != 'EN' we route to a separate file. This is a domain decision - a generic project might drop it, but as marketing AI, we anticipate future global needs, so we curate it separately rather than discarding it.
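Most of these rules are small, deterministic checks. A minimal plain-Python sketch of four of them follows; the content-type names, the 5-word default, the boilerplate regexes, and all function names are hypothetical stand-ins chosen for illustration, not our production values. Dates are rewritten only when they carry a full US-style MM/DD/YYYY value (an assumption about the source format); a bare “01/05” is left alone because its year is unknown.

```python
import re

# Hypothetical values for illustration only.
SHORT_FORM_TYPES = {"ad_headline", "email_subject"}
MIN_WORDS_DEFAULT = 5
BOILERPLATE_PATTERNS = [
    re.compile(r"if you wish to unsubscribe,? click here\.*", re.IGNORECASE),
    re.compile(r"©\s*\d{4}\s+\S+"),  # e.g. "© 2025 CompanyName"
]
US_DATE_RE = re.compile(r"\b(\d{1,2})/(\d{1,2})/(\d{4})\b")  # MM/DD/YYYY


def keep_short_text(text: str, content_type: str) -> bool:
    """Short-form fields may be tiny; everything else needs MIN_WORDS_DEFAULT words."""
    if content_type in SHORT_FORM_TYPES:
        return bool(text.strip())
    return len(text.split()) >= MIN_WORDS_DEFAULT


def strip_boilerplate(text: str) -> str:
    """Remove known template footers, then collapse the leftover whitespace."""
    for pattern in BOILERPLATE_PATTERNS:
        text = pattern.sub("", text)
    return " ".join(text.split())


def normalize_dates(text: str) -> str:
    """Rewrite MM/DD/YYYY dates as YYYY-MM-DD; partial dates are left as-is."""
    return US_DATE_RE.sub(
        lambda m: f"{m.group(3)}-{int(m.group(1)):02d}-{int(m.group(2)):02d}", text
    )


def route_by_language(lang_code: str) -> str:
    """Non-English text goes to a separate bucket instead of being discarded."""
    return "english" if lang_code == "EN" else "non_english"
```

For example, `keep_short_text("Just Do It", "ad_headline")` keeps the slogan, while a lone “Thanks” with no short-form content type is dropped.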

The overarching theme is flexibility. NeMo Curator’s pipeline allowed us to inject these rules and toggles so that the curation process truly understands marketing context. It’s not a one-size-fits-all cleaning; it’s tailored. The benefit is seen downstream - our model isn’t inadvertently biased by, say, seeing “Terms and Conditions” repeated ad nauseam, and it learned to treat short catchy phrases as meaningful, not as outliers.

Now that we’ve conceptually outlined the pipeline, the next section will walk through it step by step in action, effectively showing how data flows through each stage in our marketing data curation pipeline (If you’re not a technical person - feel free to skip it).

Inside the NeMo Curator Text Pipeline: Step-by-Step Walkthrough

To make this concrete, let’s walk through our marketing data curation pipeline stage by stage, following the journey of the data. We’ll use a running example: imagine we have a dataset consisting of a few sources - ads.csv (ad copy and metrics), analytics.json (web analytics events with descriptions), and social_posts.json (social media posts with comments). Here’s how we process them:

Step 1 - Load: Ingesting from the Marketing Stack

Everything begins by loading raw data into the pipeline. We configure NeMo Curator’s readers to pull in our files. For example, we use a CSV reader for ads.csv and JSON readers for the others. The pipeline at this stage creates a stream of documents (internally, perhaps a DocumentDataset or DataFrame). Each document might look like:

Ad record: {"id": "AD123", "text": "50% OFF all shoes - this week only!", "type": "ad_headline", "impressions": 10000, "clicks": 500, ... }

Web analytics record: {"id": "EVT99", "text": "Completed checkout (Step 4 of 4 in Purchase Funnel)", "type": "analytics_event", "users": 134, ... }

Social post record: {"id": "TW456", "text": "Excited to launch our new product! Check it out [link]", "type": "tweet", "likes": 20, "replies": ["Looks great!", "Congrats 🎉"] }

We specify which fields contain the text (text in these examples) and which fields are metadata.

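from nemo_curator.stages.text.io.reader import JsonlReader

# ads.csv is loaded by a separate CSV reader stage (not shown here)
pipeline.add_stage(
    JsonlReader(
        file_paths=["./raw_data/analytics", "./raw_data/social_posts"],
        # "text" is the content to process; "id" and "type" ride along as metadata
        fields=["id", "text", "type"],
    )
)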

NeMo Curator will treat the text as the content to process, but it can carry along the metadata fields (id, type, etc.) so we don’t lose that context. At this load stage, not much “happens” to the text - we’re just getting everything into the pipeline’s uniform format (for instance, combining all sources into one flow). This is where we also might add a brand_id tag if not already present (e.g., all these records get a brand_id: ACME_Co if they belong to Acme Co.’s data).

What it solves: Without a unified loading step, it’d be painful to coordinate multiple input sources. Here we simply add multiple reader stages or one composite reader, and the pipeline now “knows” about all our raw documents.
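To show what “one uniform format” means in practice, here is a standard-library sketch that turns a CSV export and a JSON Lines export into the same record shape and tags each record with a brand_id. It stands in for NeMo Curator’s readers rather than reproducing them; the column names (id, text), the helper names, and the sample rows are assumptions.

```python
import csv
import io
import json
from typing import Iterable, Iterator, TextIO


def load_csv_records(fh: TextIO, content_type: str, brand_id: str) -> Iterator[dict]:
    """Yield {id, text, type, brand_id} records from a CSV with id/text columns."""
    for row in csv.DictReader(fh):
        yield {"id": row["id"], "text": row["text"], "type": content_type, "brand_id": brand_id}


def load_jsonl_records(fh: Iterable[str], brand_id: str) -> Iterator[dict]:
    """Yield records from JSON Lines, adding brand_id when it is missing."""
    for line in fh:
        if line.strip():
            record = json.loads(line)
            record.setdefault("brand_id", brand_id)
            yield record


# In-memory stand-ins for ads.csv and a social posts export (sample data).
ads_csv = io.StringIO("id,text\nAD123,50% OFF all shoes - this week only!\n")
posts_jsonl = io.StringIO('{"id": "TW456", "text": "Excited to launch our new product!", "type": "tweet"}\n')

records = list(load_csv_records(ads_csv, "ad_headline", "ACME_Co"))
records += list(load_jsonl_records(posts_jsonl, "ACME_Co"))
```

Both sources end up as dictionaries with the same keys, which is what lets every later stage treat them as one stream.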

Step 2 - Normalize: Cleaning and Standardizing Text

Next, the data flows into cleaning modules. At this normalize stage, we address all the textual gunk:

We apply a Unicode normalization to fix characters. For example, if an ad text had “50% OFF” but the “OFF” was in some odd full-width characters or the percent sign was a homoglyph, this fixes that. Curly quotes are turned into straight quotes, ensuring consistency.

We strip HTML and XML from any fields. Suppose our web analytics text was extracted from a tooltip that contained HTML like <b>Completed checkout</b> ... - after this stage, we’d have just “Completed checkout (Step 4 of 4 in Purchase Funnel)”. Similarly, if social posts contain <br> tags or &amp; entities from an export, those are converted to real newlines or '&'.

We remove known boilerplate sections. For instance, let’s say our ads.csv had a column “disclaimer” that often just contains “All rights reserved by CompanyName.” We might drop that part of the text or exclude that column entirely from the pipeline at read time.

We also normalize whitespace - multiple spaces become one, leading/trailing spaces are trimmed, and weird line breaks are smoothed. An example: “50% OFF all shoes - this week only!\n\n” (with a couple blank lines) would become “50% OFF all shoes - this week only!” after normalization.

After this stage, the text content is clean, plain, and standardized. A quick before-and-after example:

Before: <div class="text">🔥 Excited to launch our new product! Check it out here: http://bit.ly/XYZ</div>\n<p>Contact John at john@example.com for details.</p>

After: 🔥 Excited to launch our new product! Check it out here: http://bit.ly/XYZ\nContact {{name}} at {{email}} for details.

(Note: the “After” text already shows PII redaction; in practice that happens at Step 5, but it illustrates how the final text looks.)

Normalization lays the foundation so that subsequent filters operate on a clean slate, not on messy raw text.
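The normalize step can be approximated with the Python standard library alone. The sketch below is an assumption-laden stand-in for the HtmlStripper and UnicodeReformatter stages in our pipeline code, not their actual implementation: it uses NFKC normalization (which folds full-width letters and non-breaking spaces into plain ASCII), a small quote map, and regexes for tags and template markers like {{ FirstName }}. Because it runs before PII redaction, removing every {{...}} marker here cannot erase the placeholders added in Step 5.

```python
import html
import re
import unicodedata

BLOCK_TAG_RE = re.compile(r"<\s*(?:br|/p|/div)\s*/?\s*>", re.IGNORECASE)  # line-breaking tags
TAG_RE = re.compile(r"<[^>]+>")
TEMPLATE_MARKER_RE = re.compile(r"\{\{\s*\w+\s*\}\}")  # e.g. {{ FirstName }}
QUOTE_MAP = str.maketrans({"\u2018": "'", "\u2019": "'", "\u201c": '"', "\u201d": '"'})


def normalize_text(raw: str) -> str:
    text = BLOCK_TAG_RE.sub("\n", raw)          # <br>, </p>, </div> become newlines
    text = TAG_RE.sub("", text)                 # drop all remaining tags
    text = html.unescape(text)                  # &amp; -> &, &nbsp; -> U+00A0
    text = unicodedata.normalize("NFKC", text)  # full-width -> ASCII, U+00A0 -> space
    text = text.translate(QUOTE_MAP)            # curly quotes -> straight quotes
    text = TEMPLATE_MARKER_RE.sub("", text)     # unfilled template variables
    # Collapse runs of whitespace inside each line and drop blank lines.
    lines = (" ".join(line.split()) for line in text.splitlines())
    return "\n".join(line for line in lines if line)
```

Applied to the “Before” example above, this yields the two clean lines of the “After” example, except that the name and email are still present until Step 5 redacts them.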

Step 3 - Language & Basic Structural Filters

Now that we have clean text, we enforce some basic filters to drop content that we know we won’t use:

Language detection: Each document’s text is passed through a language identifier (using FastText under the hood). We get back a language code like “EN” and a confidence score. If the text is not English (and we’re currently only training English models), we remove it from the pipeline. In our example, if one of the social posts was in Spanish, it would be filtered out here. (We might log it or save it elsewhere, but it won’t proceed to training data). If it’s English or possibly multilingual with English, it stays.

Min length filter: We set a rule like “the text must have at least X characters or Y words, otherwise drop it.” We use this to weed out entries like “OK” or “Test” or empty notes that sometimes appear. For example, if a CRM note was just “N/A” or “—”, it’s gone now.

Structure/symbol filter: We apply a non-alphanumeric character ratio filter. If a text is over 50% symbols/digits rather than letters, it’s probably not natural text. For instance, a chunk like “ID: 12345; REF: abc-999-xyz” is mostly technical gibberish for our purposes; the filter flags it and we drop it. Similarly, a long URL or a string of emoji with no words is dropped here.

Max length or splits: Although not always needed, we are cautious about extremely long text. Let’s say one of our content assets is a full PDF whitepaper converted to text - maybe 20,000 words. Rather than drop it, in some cases we split long documents into smaller chunks (because extremely long sequences are hard for the model to train on in one go). Curator has a splitter stage if needed. In our case, we rarely had single texts that long, but if we did (like an entire e-book), we’d chunk it by chapters or sections at this stage.

After Step 3, we have ensured that the data is in the right language and is in a reasonable shape (not too short, not pure noise). We haven’t yet applied quality judgments beyond that, but we have thrown out the obvious non-useful bits. For example, maybe 5% of our data (the empty or non-English or trivial stuff) gets cut out here.
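A plain-Python sketch of the Step 3 checks follows. The thresholds (0.7 language confidence, 3 words, a 0.5 ratio, 1,000-word chunks) mirror the values used in our pipeline code, but the helper names are hypothetical. One design note: the ratio here counts digits as well as symbols against the text, which is what flags “ID: 12345; REF: abc-999-xyz”. As far as we know, NeMo Curator’s NonAlphaNumericFilter treats digits as alphanumeric, so it applies a slightly more lenient rule.

```python
def keep_language(lang_code: str, score: float, min_score: float = 0.7) -> bool:
    """Keep only confidently detected English text."""
    return lang_code == "EN" and score >= min_score


def non_letter_ratio(text: str) -> float:
    """Share of non-whitespace characters that are not letters (digits, symbols, emoji)."""
    chars = [c for c in text if not c.isspace()]
    if not chars:
        return 1.0
    return sum(not c.isalpha() for c in chars) / len(chars)


def passes_structure_filters(text: str, min_words: int = 3, max_ratio: float = 0.5) -> bool:
    """Enough words, and mostly letters rather than digits or symbols."""
    return len(text.split()) >= min_words and non_letter_ratio(text) <= max_ratio


def chunk_words(text: str, max_words: int = 1000) -> list[str]:
    """Split an over-long document into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]
```

In the reference string, 13 of the 24 non-space characters are digits or punctuation, so its ratio of about 0.54 exceeds the 0.5 limit, while “50% OFF all shoes this week only!” scores roughly 0.15 and passes.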

Step 4 - Quality and Safety Filtering

This step is all about content quality. Now we ask: is this text something we want the model to learn from?

Heuristic quality filters: One example we use is a WordCountFilter (as shown in NeMo examples) to ensure a minimum word count. We might require at least 3-5 words (post normalization) to keep a text, unless it’s known to be a short-form field (handled as mentioned before). We also ensure some texts aren’t ridiculously long by word count (as a sanity check beyond just character count).

Domain-specific stop words or blacklist: We compiled a small list of things that, if a text contains them, we drop that text. This includes placeholders like “Lorem ipsum” (sometimes found in unfinished web pages or templates), or strings like “BEGIN PGP SIGNATURE” (we found a PGP block in some scraped content). Those indicate the content isn’t actual marketing text. We also exclude any content that looks like coding or logs (e.g., if a text snippet starts with { and has JSON structure, and it wasn’t supposed to, we drop it).

Toxicity and profanity filter: We run each text through a lightweight toxicity detector (Curator can integrate with something like the Perspective API or a local model). If a social comment is full of profanity or hate speech, it’s removed. For instance, if someone left a comment “This brand is $@#&ing terrible and you all suck!”, that’s not going into training. It’s both not useful for marketing tasks and also something we don’t want the model to mirror. We set the threshold reasonably high (we’re okay with mild frustrations or “this sucks” in feedback, but not slurs or extremely abusive content).

Redundancy / low info filter: We sometimes have data where a piece of text is basically just a duplicate of some structured info, like a product code repeated. We already filtered a lot by structure, but as an extra, we had a rule: if after all the cleaning, a text is just a single word or a code that appears nowhere in natural language (like “X3Q5Z”), drop it. This catches any edge cases not caught by earlier filters.
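The heuristic parts of Step 4 can be sketched as a single predicate. The blocklist entries come from the examples above; the JSON check and the “opaque code” pattern (a single token mixing letters and digits, like “X3Q5Z”) are illustrative assumptions, and the toxicity classifier is not shown because it depends on the model or API in use.

```python
import json
import re

BLOCKLIST = ("lorem ipsum", "begin pgp signature")  # matched case-insensitively
# A lone token of 4+ characters mixing letters and digits, e.g. "X3Q5Z".
OPAQUE_CODE_RE = re.compile(r"(?=.*[A-Za-z])(?=.*\d)[A-Za-z0-9]{4,}")


def looks_like_json(text: str) -> bool:
    """True if the text is a parseable JSON object or array."""
    stripped = text.strip()
    if not stripped.startswith(("{", "[")):
        return False
    try:
        json.loads(stripped)
    except ValueError:  # json.JSONDecodeError subclasses ValueError
        return False
    return True


def passes_quality_filters(text: str) -> bool:
    lowered = text.lower()
    if any(term in lowered for term in BLOCKLIST):
        return False
    if looks_like_json(text):
        return False
    if OPAQUE_CODE_RE.fullmatch(text.strip()):
        return False
    return True
```

Parsing the JSON rather than just checking for a leading “{” matters here: text that starts with a placeholder such as “{{name}} loved the new collection” is not valid JSON and therefore survives.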

At this point, the quality filter leaves us with data that is topical, mostly well-formed, and aligned with what we consider valuable training data. We may have dropped, say, another 5-10% of content that was deemed too noisy or unsafe. The majority that remains is good stuff - e.g., actual ad copy, real user feedback (minus the nasty ones), marketing emails, etc.

One might wonder: are we overzealous in filtering, might we drop something useful? We try to err on the side of caution for training. The beauty of having a pipeline is we can always relax a filter and re-run if we find we were too strict. For instance, if we noticed we were dropping too many short social comments that were actually benign, we could adjust the thresholds.

Step 5 - PII Detection and Redaction

Now comes one of the most critical steps: scrubbing PII. At this stage, the content is pretty clean and relevant, but it may still contain things like names or emails. We use NeMo Curator’s PII modules to scan each text for any personally identifiable information and mask it out.

Here’s how it typically works in our pipeline:

The PII module goes through the text and finds patterns. If it sees something that looks like an email (regex like \S+@\S+\.\S+), it will replace that substring with {{email}}. Phone numbers (e.g., 555-123-4567 or (123) 456-7890) get replaced with {{phone_number}}. Names are trickier - the module might use a combination of dictionaries and model inference. It can recognize capitalized first-last name pairs in many cases. If it’s confident “John Smith” is a name, that becomes {{name}}.

We also consider IDs: a customer ID like CID: 789123 could be sensitive. If it fits a known pattern, we might redact it as {{customer_id}} or simply remove it. Same for things like addresses, which often look like “123 Main St” (goes to {{address}}).

URLs can be a privacy concern if they contain tracking info or user IDs. We decided to keep URLs if they are generic (like a link to the company site) but if a URL contains an email or ID parameter, we either strip the query parameters or replace the whole URL with a placeholder. E.g., http://example.com/welcome?email=john@example.com would be redacted in the part after email=.

After redaction, we double-check that the placeholders themselves aren’t confusing the model. They’re wrapped in double braces {{...}}, which is a pattern we know and can instruct the model to treat as “placeholder”. In fact, having them consistently formatted helps the model learn a pattern like “{{email}}” means some email was there. We’ve found this consistency important - Curator uses exactly this convention in its defaults[8], which was convenient.
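URL and ID rules are easy to get subtly wrong, so here is a standard-library sketch of the approach described above: parse each URL, replace the values of sensitive query parameters, and leave generic links intact. The parameter map, the “CID:” pattern, and the helper names are hypothetical; a production version would need rules for the ID and address formats of each data source.

```python
import re
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical map of sensitive query parameters to placeholders.
SENSITIVE_PARAMS = {"email": "{{email}}", "user_id": "{{user_id}}"}
CUSTOMER_ID_RE = re.compile(r"\b(CID:\s*)\d+\b")
URL_RE = re.compile(r"https?://\S+")


def redact_url(url: str) -> str:
    """Mask sensitive query values; other parameters are kept (and re-encoded)."""
    parts = urlsplit(url)
    query = [
        (key, SENSITIVE_PARAMS.get(key.lower(), value))
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
    ]
    # safe="{}" keeps the {{placeholder}} braces from being percent-encoded.
    return urlunsplit(parts._replace(query=urlencode(query, safe="{}")))


def redact_ids_and_urls(text: str) -> str:
    text = CUSTOMER_ID_RE.sub(r"\1{{customer_id}}", text)
    return URL_RE.sub(lambda m: redact_url(m.group(0)), text)
```

Only the value after `email=` changes in the example URL from the text, and a plain product link passes through untouched.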

By the end of this step, the dataset is privacy-safe. We can be confident we’re not leaking someone’s contact info or personal details in the training set. For example, a text that originally read “Hi Jane Doe, your order 12345 has shipped. Track at ups.com/track?ID=ABC123. Call (555) 678-9101 for support.” now looks like “Hi {{name}}, your order {{unique_identifier}} has shipped. Track at ups.com/track?ID={{tracking_number}}. Call {{phone_number}} for support.” (We took the liberty of treating even the order ID and tracking number as sensitive, or at least as unique identifiers worth masking.) This still reads coherently for an AI - it can learn that a typical shipping notice has a name, an order number, a tracking link, and a phone number - but it doesn’t learn the specific ones.

For our marketing AI use case, this was absolutely essential, because we often deal with CRM and email data where such personal references are common. We can’t risk the model learning them or outputting them. NeMo Curator’s capabilities here saved a ton of manual effort and worry.

Step 6 - Deduplication: Exact and Fuzzy

With PII out of the way, we tackle duplication. By now, we have a lot of cleaned, good content, but as noted, marketing data is highly repetitive. Deduplication in our pipeline is typically a two-pass process:

Exact deduplication: We compute a hash (MD5 or similar) for each text (after normalization and PII masking). If two records have the exact same hash, we drop one as a duplicate. For example, if the exact same product description was used for 100 products, we keep it once. Curator can do this efficiently at scale. This step is straightforward and removes identical copies.

Fuzzy-text deduplication: We then use a fuzzy matching algorithm to catch texts that are almost the same. One approach Curator supports is MinHash/LSH, which clusters texts with high Jaccard similarity. Another is to use embeddings (like Sentence Transformers) to find texts that are very close in meaning; this is called semantic deduplication. We used the fuzzy (MinHash/LSH) approach, although long texts may call for a more aggressive approach to merging near-duplicates.

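Example scenario: we had two ads:

Ad A: “50% off all shoes this week only!”

Ad B: “50% off all shoes - this week only!”

The only difference is a minor punctuation change, so exact hashing treats them as two distinct texts, while fuzzy deduplication groups them together and keeps just one.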

After deduplication, our dataset shrinks - often substantially. We observed, for instance, that our email content contained about 30% duplicates due to recurring newsletter intros and outros. Deduping not only helps model training, but also reduces storage and speeds up training (less redundant data to process). We log how many duplicates we dropped, to inform our team and as a sanity check (if we suddenly dropped 90%, maybe the dedup was too aggressive or something went wrong).

By the end of this stage, every piece of text in the dataset is (to the best of our automated ability) unique in content. This means each training example is teaching the model something at least slightly new.
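To show why the two passes behave differently, here is a small, self-contained pure-Python sketch: an MD5-based exact pass followed by a MinHash + LSH fuzzy pass. It illustrates the technique and is not NeMo Curator’s GPU-accelerated implementation; the 5-character shingles, 64 hash functions, 16 bands, the 0.8 threshold, and the lowercasing and punctuation stripping before shingling are all assumptions chosen for the example.

```python
import hashlib
import re
from collections import defaultdict
from itertools import combinations


def exact_dedup(texts: list[str]) -> list[str]:
    """Keep the first occurrence of each byte-identical text (MD5 of UTF-8)."""
    seen, kept = set(), []
    for text in texts:
        digest = hashlib.md5(text.encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            kept.append(text)
    return kept


def shingles(text: str, k: int = 5) -> set[str]:
    """Character k-grams of the lowercased text with punctuation replaced by spaces."""
    t = " ".join(re.sub(r"[^a-z0-9 ]", " ", text.lower()).split())
    return {t[i:i + k] for i in range(max(1, len(t) - k + 1))}


def minhash(shingle_set: set[str], num_perm: int = 64) -> list[int]:
    """One salted 64-bit hash per simulated permutation; keep the minimum over the set."""
    return [
        min(
            int.from_bytes(hashlib.blake2b(f"{seed}:{s}".encode(), digest_size=8).digest(), "big")
            for s in shingle_set
        )
        for seed in range(num_perm)
    ]


def fuzzy_dedup(texts: list[str], threshold: float = 0.8, num_perm: int = 64, bands: int = 16) -> list[str]:
    """Greedily drop a later text whose estimated Jaccard similarity to a kept text is >= threshold."""
    sigs = [minhash(shingles(t), num_perm) for t in texts]
    rows = num_perm // bands
    buckets = defaultdict(list)  # LSH: texts sharing any band become candidate pairs
    for idx, sig in enumerate(sigs):
        for b in range(bands):
            buckets[(b, tuple(sig[b * rows:(b + 1) * rows]))].append(idx)
    candidate_pairs = {pair for members in buckets.values() for pair in combinations(members, 2)}
    removed = set()
    for i, j in sorted(candidate_pairs):
        if i in removed or j in removed:
            continue
        estimate = sum(x == y for x, y in zip(sigs[i], sigs[j])) / num_perm
        if estimate >= threshold:
            removed.add(j)  # keep the earlier text of each near-duplicate pair
    return [t for idx, t in enumerate(texts) if idx not in removed]


ads = [
    "50% off all shoes this week only!",
    "50% off all shoes - this week only!",
    "Free shipping on every order over $50",
]
after_exact = exact_dedup(ads)          # all three survive: the shoe ads differ by one dash
after_fuzzy = fuzzy_dedup(after_exact)  # the two shoe ads collapse into one
```

The exact pass cannot see past a single dash, whereas the fuzzy pass compares shingle sets and merges Ad A and Ad B. The threshold is the main knob: lowering it catches looser rewrites, at the cost of occasionally merging genuinely different copy.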

Step 7 - Dataset Assembly and Splits

Finally, with a cleaned and deduped corpus in hand, we assemble the output and create the necessary splits for training and evaluation:

Shuffling and blending: We often intermix data from different sources at this stage. For example, we don’t want all ad texts to appear in one chunk and all blog texts in another in the final output, as that could bias how the model sees sequences during training. So we shuffle the order of documents globally. NeMo Curator can do blending of multiple input streams; since we already combined sources early on, a final shuffle suffices.

Train/Validation/Test Split: We carve out a portion of the data for validation and test. A common practice is an 80/10/10 split. But we have to be careful: splitting needs to consider the structure. For example, we don’t want texts from the same campaign appearing in both train and test (to properly evaluate generalization). We might do the split by grouping by a key (like brand or time). One approach we use is a time-based split for evaluation: hold out the most recent 10% of data (by timestamp) as a test set, so we can see how the model does on “future” data given it was trained on “past” data. This is great for temporal domains like marketing where trends change.

Output format: We then output the curated dataset. In our case, we often output to a JSON Lines (JSONL) file or a Parquet file. Each line (or each row) contains the cleaned text and possibly some metadata fields like brand_id, content_type. For example:

{"brand_id": "ACME_Co", "content_type": "ad_headline", "text": "50% OFF all shoes \u2013 this week only!"}
{"brand_id": "ACME_Co", "content_type": "email_body", "text": "Hi {{name}}, your order {{unique_identifier}} has shipped. ..."}
{"brand_id": "ACME_Co", "content_type": "tweet", "text": "Excited to launch our new product! Check it out here: ... #NewArrivals"}

These outputs are stored in something like curated_marketing_corpus_train.jsonl and similarly ..._val.jsonl, ..._test.jsonl. If needed, we can also produce multiple corpora (maybe one per brand or one per content type) depending on training strategy. But usually a combined corpus with metadata works for us.

Verification: As a last step, we often run some quick checks on the output: e.g., search for any leftover @ or emails to ensure PII is truly gone, or scan for any obviously wrong data. NeMo Curator pipelines are deterministic given the same input, so we can regenerate if we need to tweak something.
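Here is a minimal sketch of the time-based split and the post-run “leftover @” check, assuming every record carries an ISO-8601 timestamp string (so lexical order equals chronological order). The 80/10/10 proportions come from the text; the field names, the helper names, and the choice to carve validation out of the period just before the test window are assumptions.

```python
import json


def split_by_time(records: list[dict], val_fraction: float = 0.1, test_fraction: float = 0.1):
    """Oldest records go to train, the next slice to validation, the newest to test."""
    ordered = sorted(records, key=lambda r: r["timestamp"])
    n = len(ordered)
    n_test = int(n * test_fraction)
    n_val = int(n * val_fraction)
    train_end = n - n_val - n_test
    return ordered[:train_end], ordered[train_end:n - n_test], ordered[n - n_test:]


def find_leftover_at_signs(records: list[dict]) -> list[str]:
    """IDs of records whose text still contains '@' (a possible email or @mention)."""
    return [r["id"] for r in records if "@" in r["text"]]


def to_jsonl(records: list[dict]) -> str:
    """Serialize records as JSON Lines, one object per line."""
    return "".join(json.dumps(r) + "\n" for r in records)


# Sample data: 20 daily records in January 2025, one with an unredacted email.
records = [
    {"id": f"r{i}", "timestamp": f"2025-01-{i + 1:02d}", "text": f"Post number {i}"}
    for i in range(20)
]
records[5]["text"] = "Contact jane@example.com for details"
train, val, test = split_by_time(records)
```

With 20 records this gives 16/2/2, and the test set holds only the two most recent days; the leak check flags `r5`, which is exactly the kind of record we would send back through the PII stage.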

After step 7, we have our final curated marketing datasets. From a jumble of raw files to a neat package of polished text data, the pipeline has done its job. The result is ready to be fed into our model training pipelines (be it for pre-training, fine-tuning, or evaluation scripts).

To solidify understanding, the next section will present a code example of this pipeline, showing how we define it using NeMo Curator’s Python API.

A Full NeMo Curator Text Pipeline for Marketing: Code Example

Let’s look at a simplified (but illustrative) code example of how we define our NeMo Curator pipeline in code. This example will demonstrate a pipeline that reads marketing data, applies cleaning, filtering, PII redaction, deduplication, and writes out the curated dataset. We’ll use Python pseudo-code inspired by the NeMo Curator API for clarity:

from nemo_curator.pipeline import Pipeline
from nemo_curator.stages.deduplication.fuzzy import FuzzyDeduplicationWorkflow
from nemo_curator.stages.deduplication.id_generator import CURATOR_DEDUP_ID_STR
from nemo_curator.stages.function_decorators import processing_stage
from nemo_curator.stages.text.deduplication import TextDuplicatesRemovalWorkflow
from nemo_curator.stages.text.filters import FastTextLangId, NonAlphaNumericFilter, WordCountFilter
from nemo_curator.stages.text.io.reader import JsonlReader
from nemo_curator.stages.text.io.writer import JsonlWriter
from nemo_curator.stages.text.modifiers import UnicodeReformatter
from nemo_curator.stages.text.modules import Modify, ScoreFilter
from nemo_curator.tasks import DocumentBatch

# In-house stages (not part of NeMo Curator)
from custom_filters import ProfanityFilter, RegExFilter
from custom_modifiers import HtmlStripper
from custom_pii_redactor import PiiRedactor

pipeline = Pipeline(
    name="marketing_data_curation",
    description="Pipeline to clean, filter, and prepare marketing text data",
)

# Step 1 - Load: "text" is processed; "id" and "type" ride along as metadata
pipeline.add_stage(
    JsonlReader(
        file_paths=["./raw_data/analytics", "./raw_data/social_posts"],
        fields=["id", "text", "type"],
    )
)

# Step 2 - Normalize
pipeline.add_stage(Modify(HtmlStripper()))
pipeline.add_stage(Modify(UnicodeReformatter()))

# Step 3 - Language ID: keeps documents whose language confidence is >= 0.7
# and stores the score and language code in the "language" column
pipeline.add_stage(
    ScoreFilter(
        FastTextLangId(model_path="./lid.176.ftz", min_langid_score=0.7),
        score_field="language",
    )
)

@processing_stage(name="NonEnglishFilter")
def non_english_filter(task: DocumentBatch) -> DocumentBatch:
    # Keep only English rows; "language" is assumed to hold [score, language_code]
    df = task.to_pandas()
    df = df[df["language"].apply(lambda score_and_lang: score_and_lang[1] == "EN")]
    return DocumentBatch(
        task_id=task.task_id,
        dataset_name=task.dataset_name,
        data=df,
        _metadata=task._metadata,
        _stage_perf=task._stage_perf,
    )

pipeline.add_stage(non_english_filter)

# Step 3 - Length and structure filters
pipeline.add_stage(ScoreFilter(WordCountFilter(min_words=3, max_words=1000)))
pipeline.add_stage(ScoreFilter(NonAlphaNumericFilter(max_non_alpha_numeric_to_text_ratio=0.5)))

# Step 4 - Quality and safety filters
pipeline.add_stage(ScoreFilter(ProfanityFilter()))
pipeline.add_stage(ScoreFilter(RegExFilter(patterns=[r"lorem ipsum", r"Lorem Ipsum"])))

# Step 5 - PII redaction
pipeline.add_stage(PiiRedactor(labels=["email", "phone_number", "name", "address"]))

# Write intermediate results, which the deduplication workflows read from disk
pipeline.add_stage(JsonlWriter(path="./intermediate_results"))
pipeline.run()

# Step 6 - Fuzzy deduplication: first identify near-duplicate IDs...
fuzzy_deduplication_workflow = FuzzyDeduplicationWorkflow(
    cache_path="./fuzzy_cache_path",
    output_path="./fuzzy_outputs",
    input_path="./intermediate_results",
    input_filetype="jsonl",
    input_blocksize="512MiB",
    text_field="text",
)
fuzzy_deduplication_workflow.run()

# ...then remove them from the dataset
fuzzy_removal_workflow = TextDuplicatesRemovalWorkflow(
    input_path="./intermediate_results",
    ids_to_remove_path="./fuzzy_outputs/FuzzyDuplicateIds",
    output_path="./fuzzy_deduped_dataset",
    input_filetype="jsonl",
    input_blocksize="512MiB",
    ids_to_remove_duplicate_id_field=CURATOR_DEDUP_ID_STR,
    id_generator_path="./fuzzy_outputs/fuzzy_id_generator.json",
    output_filetype="jsonl",
)
fuzzy_removal_workflow.run()

This pipeline, when run, will print logs or a success message. The output will contain our clean, domain-tuned marketing data, ready for use.

(Note: The code above is a simplified illustration. In a real setup, some stage names or parameters may differ due to updates/versions. NVIDIA’s documentation provides precise class names and usage. The overall structure would be similar to what’s shown.)

From Curated Data to Domain-Specific LLMs and SLMs for Marketing

How Curated Data Feeds Markethinking and SLMs

With our curated dataset in hand (thanks to NeMo Curator), the next step is to use it to train and fine-tune our domain-specific LLMs and SLMs. For Markethinking - our primary marketing LLM - the curated data is used in a couple of ways:

Domain-Adaptive Pretraining: We take a pretrained base model (for example, a 20B-parameter general LLM that knows English well) and continue its training on our curated marketing corpus (this is often called continued pretraining or domain-adaptive pretraining). By exposing the model to millions of marketing-specific tokens - from ad copy to strategy docs - we imbue it with marketing domain knowledge. Essentially, the model’s weights adjust to better predict marketing text. For instance, it learns that the word “conversion” is often followed by “rate” in our data, or that “CTR” means “click-through rate” and usually comes with a percentage. This makes the model naturally fluent in marketing speak.
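Real continued pretraining optimizes a next-token loss with gradient descent over a very large number of tokens, but the kind of regularity the model absorbs can be illustrated with a toy bigram count. The sketch below is purely illustrative (the three-sentence corpus is made up); it shows how, in marketing text, "conversion" tends to be followed by "rate".

```python
from collections import Counter, defaultdict


def next_word_counts(docs):
    """Count which word follows each word across a tiny corpus (a toy bigram model)."""
    follows = defaultdict(Counter)
    for doc in docs:
        words = doc.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


corpus = [
    "Our conversion rate rose after the landing page test",
    "Email drove a higher conversion rate than paid social",
    "Conversion tracking was broken for two days",
]
follows = next_word_counts(corpus)
print(follows["conversion"].most_common(1))  # [('rate', 2)]
```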

Supervised Fine-Tuning for Tasks: Beyond just unsupervised pretraining, we also use curated data to create supervised fine-tuning datasets for specific tasks. For example, we might assemble Q&A pairs from marketing FAQs, or instruction-following data where the prompt is a marketing task (“Draft a social post about X using our brand tone”) and the response is a human-written ideal output. Our curated corpus helps generate these because it contains authentic examples to draw from. We can prompt the base model with real marketing scenarios from the data and have humans refine the output to create a high-quality fine-tuning set.
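As a minimal sketch of the instruction-data format, assuming a simple JSONL layout with hypothetical `prompt`, `response`, and `human_reviewed` fields (real fine-tuning frameworks each expect their own schema), the helpers below build examples and keep only those a human has refined:

```python
import json


def make_sft_record(task, brand_tone, response, reviewed=False):
    """Build one instruction-following example; field names are illustrative."""
    prompt = f"{task} Use our brand tone: {brand_tone}."
    return {"prompt": prompt, "response": response, "human_reviewed": reviewed}


def to_jsonl(records):
    # Keep only examples a human has refined, one JSON object per line.
    return "\n".join(
        json.dumps(r, ensure_ascii=False) for r in records if r["human_reviewed"]
    )


records = [
    make_sft_record("Draft a social post about our spring sale.", "playful",
                    "Spring into savings! 20% off everything this week.", reviewed=True),
    make_sft_record("Draft a social post about our new app.", "playful",
                    "Model draft awaiting human review."),
]
print(to_jsonl(records))
```

Gating on a review flag keeps raw model drafts out of the fine-tuning set, which matches the idea that humans refine outputs before they become training targets.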

Training SLMs (Smaller models): For smaller models, we might not do a two-phase approach (pretrain then fine-tune) due to resource constraints. Instead, we might train an SLM from scratch or from a smaller checkpoint primarily on the curated marketing data (plus some general data if needed). Because the curated data is focused and high-quality, a smaller model can still achieve good performance - it isn’t diluted by garbage or off-topic data. In our experience, a model of around 500M parameters trained mostly on marketing text can outperform a generic 2B model on marketing tasks. This is consistent with observations in other domains that a targeted dataset can matter more than sheer model size.
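The "plus some general data" mix can be sketched as weighted sampling between two corpora. The 90/10 split, the `mix_corpora` name, and the toy documents are assumptions for illustration; production data loaders typically mix at the token or shard level rather than document by document.

```python
import random


def mix_corpora(domain_docs, general_docs, domain_fraction=0.9, n_samples=1000, seed=0):
    """Sample a training stream that is mostly domain text plus some general text.

    Each draw picks the domain corpus with probability `domain_fraction`
    (an assumed ratio for illustration), then a random document from it.
    """
    rng = random.Random(seed)
    stream = []
    for _ in range(n_samples):
        source = domain_docs if rng.random() < domain_fraction else general_docs
        stream.append(rng.choice(source))
    return stream


marketing = ["CTR rose after the creative refresh", "Retarget cart abandoners within a day"]
general = ["The river froze early this winter"]
stream = mix_corpora(marketing, general)
share = sum(doc in marketing for doc in stream) / len(stream)
print(f"marketing share: {share:.2f}")
```

With the defaults, the printed marketing share should land near 0.9; the fixed `seed` keeps the mixture reproducible across runs.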

The closed-loop nature of our product (autonomous agents that continuously learn) also benefits from curated data. After initial training, when our agents are deployed, they generate new data (e.g., new campaign results, new content they wrote). We periodically take that new data, curate it (using the same pipeline), and fine-tune or refresh the models. NeMo Curator’s efficiency means we can rebuild an updated corpus quickly, enabling faster model iteration cycles. In a way, we have a data flywheel: more usage leads to more data, which via Curator leads to better models, which drives more usage.
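The refresh step of the flywheel can be sketched as merging newly generated records into the existing corpus while skipping anything already present. This is a simplified stand-in for re-running the full curation pipeline: `refresh_corpus` and `text_key` are hypothetical helpers, the quality check is a bare word count, and the hashing only catches exact matches after case and whitespace normalization, not the near-duplicates that fuzzy deduplication finds.

```python
import hashlib


def text_key(text):
    """Normalize whitespace and case, then hash, so trivial variants collide."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def refresh_corpus(corpus, new_records, min_words=3):
    """Append agent-generated records that pass a basic filter and are not already present."""
    seen = {text_key(doc) for doc in corpus}
    added = []
    for text in new_records:
        if len(text.split()) < min_words:
            continue  # too short to be useful training text
        key = text_key(text)
        if key in seen:
            continue  # normalized exact duplicate of something we already have
        seen.add(key)
        added.append(text)
    return corpus + added, added


corpus = ["Email open rate improved after subject line tests"]
new = ["email open rate improved after  subject line tests", "Launch the summer promo", "OK"]
corpus, added = refresh_corpus(corpus, new)
print(added)  # ['Launch the summer promo']
```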

Evaluation with Marketing-Specific Metrics

Training models is half the battle; we also place heavy emphasis on evaluation, and our curated data plays a role here too. How do we know our Marketing AI is good? We evaluate on tasks and criteria that matter in marketing:

Task-based evaluation: We create specific evaluation sets from our corpus for tasks like:

Ad copy generation: We might take some ads from our data (which have ground-truth performance metrics) and task the model to generate an ad for the same product, then have marketers judge if the model’s version is as compelling.

Budget recommendation or strategy planning: We have historical scenarios (e.g., “Given last month’s metrics, what should we do next?”) with known outcomes. We see if the model’s suggestions align with what successful human marketers did.

Content summarization: The model might need to read a campaign report and summarize the key learnings. We use real past reports and compare the model’s summary to a human-written summary.

These eval tasks use data drawn from our curated sets but kept separate (the validation/test splits). Because we curated the data, we trust that if the model performs well on the eval set, it’s not because it saw leaked answers (we did decontamination in NeMo Curator by isolating test data); the performance reflects genuine learning.

Human-in-the-loop evaluation: For subjective aspects like brand voice consistency or creativity, automated metrics only go so far. We involve our marketing experts or even clients in evaluating outputs. They use a rubric (Does the content match the brand’s tone? Is the strategy plausible and data-backed?) and score the model. Consistently high scores in these human evals indicate the model is effectively using its domain knowledge, which stems from the data it saw.

Factual accuracy and reasoning: Marketing involves data (numbers, dates) and causal reasoning (e.g., if CPC went up and conversions went down, ROI likely dropped). We test the model on scenarios to see if it correctly identifies trends and doesn’t hallucinate numbers. Because our training data includes many real metric relationships, the model should, for example, know typical CTR ranges, or that increasing impressions can lower CPC (given a fixed budget and a stable CTR, more impressions mean more clicks for the same spend). We sometimes generate synthetic tests, like giving the model a small table of metrics and asking questions about it: a domain-specific reading comprehension task.
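A synthetic metrics-table test of the kind described above can be generated so that the ground-truth answer is computed from the same table the model sees. In this sketch, `make_metrics_question` and `grade` are hypothetical helpers, ROI is taken as revenue / spend (an assumed definition; substitute whichever one your reporting uses), and grading is a lenient substring match rather than a full rubric.

```python
def make_metrics_question(rows):
    """Render a small metrics table and compute the ground-truth answer from it.

    rows: list of (channel, spend, revenue). ROI is assumed to be revenue / spend.
    """
    lines = ["channel | spend | revenue"]
    lines += [f"{ch} | {spend} | {rev}" for ch, spend, rev in rows]
    question = "\n".join(lines) + "\nWhich channel had the highest ROI?"
    best = max(rows, key=lambda r: r[2] / r[1])[0]
    return question, best


def grade(model_answer, expected):
    # Lenient check: the expected channel name must appear in the answer.
    return expected.lower() in model_answer.lower()


rows = [("Google Ads", 1000, 5200), ("Facebook", 2000, 9000), ("Email", 300, 900)]
question, expected = make_metrics_question(rows)
print(question)
print(grade("Google Ads had the highest ROI, roughly 5.2.", expected))  # True
```

Because the answer is derived from the table rather than written by hand, the same generator can produce many test items with no labeling effort.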

We’ve found that having a high-quality curated dataset is a prerequisite for reliable evaluation. If the model were trained on noisy data, evaluation would be tricky because the model might output inconsistent or off-base answers. By training on curated data, the model’s outputs are more stable and interpretable, which makes it easier to evaluate. For example, a model trained on clean data is much less likely to blurt out an email address or some nonsense metric - so if it does output a number or recommendation, we can focus evaluation on whether that was logically correct, not waste time filtering out garbage outputs.

Our evaluation has shown that Markethinking, after being domain-adapted, significantly outperforms generic models on marketing tasks (no surprise, similar to how BloombergGPT shines on finance). We measure things like task success rate, reduction in hallucinations, and user satisfaction. All metrics moved in the right direction after we introduced the curated training data pipeline.

Impact on Autonomous Marketing Agents

Finally, it’s worth connecting the dots to the end product: our autonomous marketing agent “team.” The improvements in data and model directly translate to tangible benefits in these agents:

Better grounding in brand data: Because each brand’s agent is trained/fine-tuned on that brand’s curated data slice (remember, we isolate each brand’s historical data), the agent’s responses are deeply grounded. For instance, if a brand always speaks in a playful tone and never mentions competitors, the agent picks up on that. If a certain strategy failed last year for that brand (and that data was in the corpus), the agent is less likely to suggest the same mistake.
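Keeping each brand's data isolated can be as simple as partitioning curated records by a brand key before building fine-tuning sets. The sketch assumes each record carries a hypothetical `brand_id` field whose values are safe to use as filenames; `split_by_brand` is illustrative, not a NeMo Curator API.

```python
import json
from collections import defaultdict
from pathlib import Path


def split_by_brand(records, out_dir, brand_field="brand_id"):
    """Write each brand's records to its own JSONL file so fine-tuning sets never mix brands."""
    groups = defaultdict(list)
    for rec in records:
        groups[rec[brand_field]].append(rec)
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    for brand, recs in groups.items():
        with (out / f"{brand}.jsonl").open("w", encoding="utf-8") as fh:
            for rec in recs:
                fh.write(json.dumps(rec, ensure_ascii=False) + "\n")
    # Per-brand counts make it easy to spot an unexpectedly empty or tiny slice.
    return {brand: len(recs) for brand, recs in groups.items()}
```

For example, `split_by_brand(records, "./brand_slices")` writes one file per brand and returns the record count for each.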

Fewer hallucinations and more factual accuracy: Our domain-specific model is less likely to hallucinate marketing facts. If asked “What was our best performing channel last month?”, the agent can give a reasonable answer like “Our Google Ads had the highest ROI, roughly 5.2, which was better than Facebook’s ROI of ~4.5” rather than a generic or made-up statement. This comes from training on actual performance text and data points. The model has essentially read thousands of such performance summaries, so it learned the pattern of referring to actual metrics.

Consistent reasoning across channels: One impressive effect we noticed: since the model has seen unified data across channels (ads, social, web, email all together), it can reason in a more integrated way. An agent might say: “We saw the email campaign boosted site traffic (web analytics confirms a spike on the send date), which in turn helped our retargeting ads (as those visitors were later retargeted on Facebook, improving conversions).” This kind of holistic reasoning is exactly what we want - it’s like having a marketer who isn’t siloed. The curated data made such cross-referencing possible, because in training the model saw content and outcomes from all these channels in one timeline.
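The "one timeline" view can be sketched by merging per-channel event streams by date. The event tuples and channel names below are made up for illustration; `heapq.merge` assumes each input list is already sorted by date, and because it streams its inputs, the same approach works on large exports if you iterate over the result instead of building a list.

```python
import heapq
from datetime import date


def unified_timeline(*channel_events):
    """Merge per-channel event lists (each already sorted by date) into one timeline."""
    return list(heapq.merge(*channel_events, key=lambda e: e[0]))


email = [(date(2024, 3, 5), "email", "Spring sale newsletter sent")]
web = [(date(2024, 3, 4), "web", "Baseline traffic"), (date(2024, 3, 5), "web", "Traffic spike")]
ads = [(date(2024, 3, 8), "ads", "Retargeting conversions up")]

for day, channel, event in unified_timeline(email, web, ads):
    print(day.isoformat(), channel, event)
```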

Adaptability and learning: As the agents operate, they also generate new data (new ads, new results). We feed that back through NeMo Curator (with perhaps some modifications for real-time or streaming use) to update the model periodically. This closes the loop: the model doesn’t stay frozen on last year’s data; it continues to learn. And since we’ve automated the curation, we can retrain or fine-tune more frequently. The result is agents that stay up-to-date with the latest trends (e.g., if new slang emerges on a social platform, the model adapts to it once it enters the data and is curated).

Overall, the investment in domain-specific LLM training with curated data has paid off in making our autonomous marketing agents truly effective co-workers. They not only generate text, but also understand the meaning behind the data they see, enabling them to make strategic decisions.

—

Outcomes and Lessons for AI Startups

Qualitative Outcomes of Using NeMo Curator

After implementing NVIDIA NeMo Curator in our data pipeline, Marketeam.ai saw several positive outcomes:

Reliability and Domain-grounding: Our Marketing AI’s outputs became noticeably more reliable and on-point. The model would reference specific marketing concepts correctly and seldom drift into irrelevant territory. For example, when asked to analyze why a campaign underperformed, it would correctly discuss factors like targeting or creative fatigue, using the kind of reasoning found in our curated data, rather than guessing or producing a generic answer. This reliability is a direct result of training on domain-grounded data - the model isn’t just a generic text generator; its knowledge base is that of a marketing analyst.

Reduction in Noise and Hallucinations: We saw a large reduction in the “weird” outputs that sometimes plague language models. Previously, if the model was unsure, it might hallucinate a statistic or event (like “a 15% CTR drop due to server downtime” out of thin air). Post-curation, such hallucinations dropped significantly. If the model doesn’t know something, it’s more likely to respond with uncertainty or ask for data, rather than make it up. The noise in outputs (unrelated sentences, off-brand tone) also reduced, because we stripped out noisy input data that could have taught the model bad habits.

Faster Iteration Cycles: With NeMo Curator handling the heavy lifting of data processing, our team could iterate on new data sources or new filtering ideas much faster. What used to take days of manual cleaning now takes hours or minutes with an automated pipeline. This means when we onboard a new client and get a dump of their marketing data, we can run it through NeMo Curator the same day and start fine-tuning a model the next. Faster data readiness led to faster model improvements, which is crucial for a startup trying to ship updates quickly.

Agility in Adjusting Pipelines: Marketing is a dynamic domain - suddenly everyone’s talking about a new TikTok trend, or a new privacy regulation arrives and we need to adjust the data. Because our pipeline is modular, adding a new rule (say, filter out “#TikTokChallenge” posts or mask a new form of ID) is straightforward. We just update the relevant stage in NeMo Curator and re-run. The agility we gained in tweaking data curation means we can respond to domain changes or discovered data issues without overhauling everything.
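The modularity described here can be illustrated without any framework: each rule is a small function that either returns a (possibly masked) document or drops it, and adding a rule means appending to a list. This is a plain-Python sketch, not NeMo Curator's API (there, the equivalent change would be a new or updated filter or modifier stage); the helper names and the two-letters-plus-six-digits ID format are hypothetical.

```python
import re

# Each rule takes a document string and returns the (possibly modified) string,
# or None to drop the document.


def drop_hashtag(tag):
    def rule(text):
        return None if tag.lower() in text.lower() else text
    return rule


def mask_pattern(pattern, replacement):
    compiled = re.compile(pattern)

    def rule(text):
        return compiled.sub(replacement, text)
    return rule


def apply_rules(docs, rules):
    kept = []
    for text in docs:
        for rule in rules:
            text = rule(text)
            if text is None:
                break  # a rule dropped this document; skip the remaining rules
        if text is not None:
            kept.append(text)
    return kept


rules = [
    drop_hashtag("#TikTokChallenge"),
    # Hypothetical ID format: two capital letters followed by six digits.
    mask_pattern(r"\b[A-Z]{2}\d{6}\b", "[ID]"),
]
docs = ["Join the #TikTokChallenge today", "Customer AB123456 renewed their plan"]
print(apply_rules(docs, rules))  # ['Customer [ID] renewed their plan']
```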

Maintainability and Team Confidence: Over time, data processing scripts can become a rat’s nest that only one engineer understands. By standardizing on NeMo Curator, we ended up with a clear, documented pipeline that any data engineer in our team can read and modify. It’s more maintainable, and because NeMo Curator is a supported toolkit, we also get updates and best practices from NVIDIA’s side (almost like having an extended team). This has reduced technical debt and increased the team’s confidence in the data. People trust that the training data going into models is the best we can have, which, in an AI company, is a big deal.

Quantitatively, we also noticed that our models reached target accuracy or loss levels with less data after curation. Training was faster (not wading through junk) and we could actually train on fewer tokens to get the same or better performance than before. This aligns with NVIDIA’s note that high-quality data yields higher accuracy with less training data. It’s not just a slogan; we saw it play out.

What We’d Do Again: Best Practices

Looking back, there are several best practices and hard-earned lessons from implementing data curation early and often. Other AI startups might find these useful:

Invest in Data Curation Early: It might be tempting to focus only on model architecture or fancy algorithms, but we’d absolutely prioritize data quality from day one again. Even a smaller model trained on good data will beat a bigger model on garbage data. If we were advising a new AI venture (whether in marketing or another domain), we’d say: set up a solid data pipeline and cleaning process as one of your first milestones. It’s a force multiplier for everything else.

Treat Domain Knowledge as First-class: Our pipeline encodes marketing knowledge (like what to keep or drop). This was only possible because marketing experts and engineers collaborated closely. We sat down with marketers to list “things you always ignore or clean up manually” and turned those into pipeline rules. Domain-specific heuristics and rules can dramatically improve an AI’s effectiveness in its domain. So, involve domain experts in designing the curation process. In practice, a simple rule (“always remove internal email footers”) can boost your model because it no longer learns from irrelevant boilerplate.
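As an example of turning a marketer's habit into a rule, here is a minimal footer stripper. The marker patterns are hypothetical placeholders for whatever your domain experts list; the approach simply truncates an email body at the first line that matches a marker.

```python
import re

# Hypothetical footer markers; in practice, the marketers supply this list.
FOOTER_MARKERS = [
    re.compile(r"^--\s*$"),  # conventional "-- " signature delimiter
    re.compile(r"^confidential", re.IGNORECASE),
    re.compile(r"^sent from my", re.IGNORECASE),
]


def strip_footer(email_body):
    """Drop everything from the first footer-marker line onward."""
    lines = email_body.splitlines()
    for i, line in enumerate(lines):
        if any(marker.match(line.strip()) for marker in FOOTER_MARKERS):
            lines = lines[:i]
            break
    return "\n".join(lines).rstrip()
```

Truncating at the first match is aggressive: a body line that legitimately begins with "Confidential" would also cut the email, so marker lists are worth reviewing with the marketers who proposed them.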